Before we start, let’s go through some important Generative AI terminology.

What is Retrieval Augmented Generation (RAG)?

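Imagine you have a magic book that can help you write stories. Normally, you think of a story in your head and then write it down from memory. With this magic book, you can ask a question like, “Tell me a story about a dragon.” Before answering, the book searches a library of dragon books, picks out the pages most relevant to your request, and then writes a story based on what it found. You can still change parts of the story to make it your own.

This magic book is like Retrieval Augmented Generation, or RAG. When someone asks a question, a RAG system first retrieves the most relevant pieces of information from a collection of documents (for example, your own PDFs or FAQs). It then passes them, along with the question, to a language model that generates the answer. Because the answer is grounded in the retrieved text, it can reflect information the model never saw during training. It’s like having a really smart friend who checks the right reference book before answering.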
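The retrieve-then-generate idea can be sketched in a few lines of Python. This is a toy illustration, not how Bedrock implements it. It assumes a tiny, made-up in-memory document list and ranks documents by how many words they share with the question. That word overlap is a crude stand-in for the embedding similarity described in the next section. The generation step is left out. In a real system, the augmented prompt would be sent to a language model.

```python
import re

# A tiny, made-up document collection; in practice these would be chunks of your files.
DOCUMENTS = [
    "Portability lets you move your health policy to another insurer.",
    "Dragons are mythical creatures that breathe fire.",
    "Premiums are usually paid once a year.",
]


def words(text: str) -> set[str]:
    """Lower-cased set of words, used for a crude relevance score."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Retrieval step: return the k documents sharing the most words with the question."""
    query = words(question)
    ranked = sorted(documents, key=lambda doc: len(query & words(doc)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Augmentation step: place the retrieved text in front of the question."""
    context_lines = "\n".join(f"- {chunk}" for chunk in context)
    return f"Answer using only this context:\n{context_lines}\n\nQuestion: {question}"


question = "Can I move my health policy to another insurer?"
prompt = build_prompt(question, retrieve(question, DOCUMENTS))
print(prompt)
# Generation step (not shown): send `prompt` to a language model.
```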

How textual data is converted into embeddings

Let’s take a simple example to understand how textual data is converted into embeddings.

Imagine we have the following three sentences:

- “The quick brown fox jumps over the lazy dog.”

- “A quick brown dog jumps over a lazy fox.”

- “The lazy cat sleeps.”

To convert these sentences into embeddings, we first need to tokenize them. Tokenization is the process of breaking down sentences into individual words or tokens. After tokenization, our sentences would look something like this:

- [“The”, “quick”, “brown”, “fox”, “jumps”, “over”, “the”, “lazy”, “dog”, “.”]

- [“A”, “quick”, “brown”, “dog”, “jumps”, “over”, “a”, “lazy”, “fox”, “.”]

- [“The”, “lazy”, “cat”, “sleeps”, “.”]
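A regular expression can reproduce the word-level tokenization shown above. This is a simplified sketch. Production embedding models usually rely on subword tokenizers (such as WordPiece or BPE) rather than splitting text into whole words.

```python
import re

sentences = [
    "The quick brown fox jumps over the lazy dog.",
    "A quick brown dog jumps over a lazy fox.",
    "The lazy cat sleeps.",
]


def tokenize(sentence: str) -> list[str]:
    """Split a sentence into word tokens and single punctuation tokens."""
    # \w+ matches runs of letters/digits; [^\w\s] matches one punctuation mark such as ".".
    return re.findall(r"\w+|[^\w\s]", sentence)


for s in sentences:
    print(tokenize(s))
```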

Next, we use an algorithm like Word2Vec or GloVe to convert each word into a vector, or embedding. These algorithms learn a word’s vector from the contexts in which the word appears across a large training corpus. As a result, words used in similar contexts end up with similar vectors.

For example, the word “quick” might be represented by the vector [0.2, 0.5, -0.1], and the word “dog” by the vector [0.3, 0.4, 0.6]. Real word vectors often have 100 to 300 dimensions; three are shown here for readability. These vectors are learned during training of the embedding model, and each word in the vocabulary gets its own vector.
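Once words are vectors, you can measure how similar two of them are with cosine similarity. This is the cosine of the angle between the two vectors, where 1 means they point in the same direction. The sketch below uses the two illustrative 3-dimensional vectors from the example above. These values are not output from a trained model.

```python
import math

# The two illustrative vectors from the example above (not from a trained model).
EMBEDDINGS = {
    "quick": [0.2, 0.5, -0.1],
    "dog": [0.3, 0.4, 0.6],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


print(cosine_similarity(EMBEDDINGS["quick"], EMBEDDINGS["dog"]))
```

With trained embeddings, a higher score usually indicates words that appear in more similar contexts.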

Once we have the embeddings for each word in the sentence, we can use them to represent the entire sentence. One common approach is to average the embeddings of all the words in the sentence to get a single vector representation of the entire sentence.

So, the sentence “The quick brown fox jumps over the lazy dog.” might be represented by a single vector that captures the overall meaning of the sentence based on the embeddings of its constituent words.
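The averaging approach, often called mean pooling, can be sketched as follows. The word vectors are hypothetical 3-dimensional values made up for illustration. The sketch makes two simplifications:

* It lower-cases tokens so that “The” and “the” share one vector.
* It keeps only word tokens and skips any word that has no vector.

```python
import re

# Hypothetical 3-dimensional word vectors, made up for illustration only.
EMBEDDINGS = {
    "the": [0.1, 0.0, 0.1],
    "quick": [0.2, 0.5, -0.1],
    "brown": [0.0, 0.3, 0.2],
    "fox": [0.4, 0.1, 0.5],
    "jumps": [0.3, 0.6, 0.0],
    "over": [0.1, 0.1, 0.0],
    "lazy": [-0.2, 0.1, 0.3],
    "dog": [0.3, 0.4, 0.6],
}


def sentence_embedding(sentence: str) -> list[float]:
    """Average (mean-pool) the vectors of the known words in a sentence."""
    # Lower-case so "The" and "the" share a vector; \w+ drops punctuation such as ".".
    tokens = re.findall(r"\w+", sentence.lower())
    vectors = [EMBEDDINGS[t] for t in tokens if t in EMBEDDINGS]  # skip unknown words
    if not vectors:
        raise ValueError("no known words in sentence")
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


print(sentence_embedding("The quick brown fox jumps over the lazy dog."))
```

Averaging ignores word order. For example, “fox jumps over dog” and “dog jumps over fox” get essentially the same vector. This is one reason contextual, transformer-based embedding models are generally preferred for sentence embeddings.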

This is a simplified explanation, but it gives you an idea of how textual data can be converted into embeddings to capture the meaning of words and sentences.

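What is an AWS Bedrock Knowledge Base?

A Knowledge Base in Amazon Bedrock is a managed way to build RAG on your own data. You point it at a data source, such as an S3 bucket. Bedrock then splits the documents into chunks, converts each chunk into an embedding with the embedding model you choose, and stores the vectors in a vector store. When a question comes in, it retrieves the most relevant chunks and passes them to a foundation model, which generates a grounded answer.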

AWS Setup Workflow for Knowledge Bases and Agents

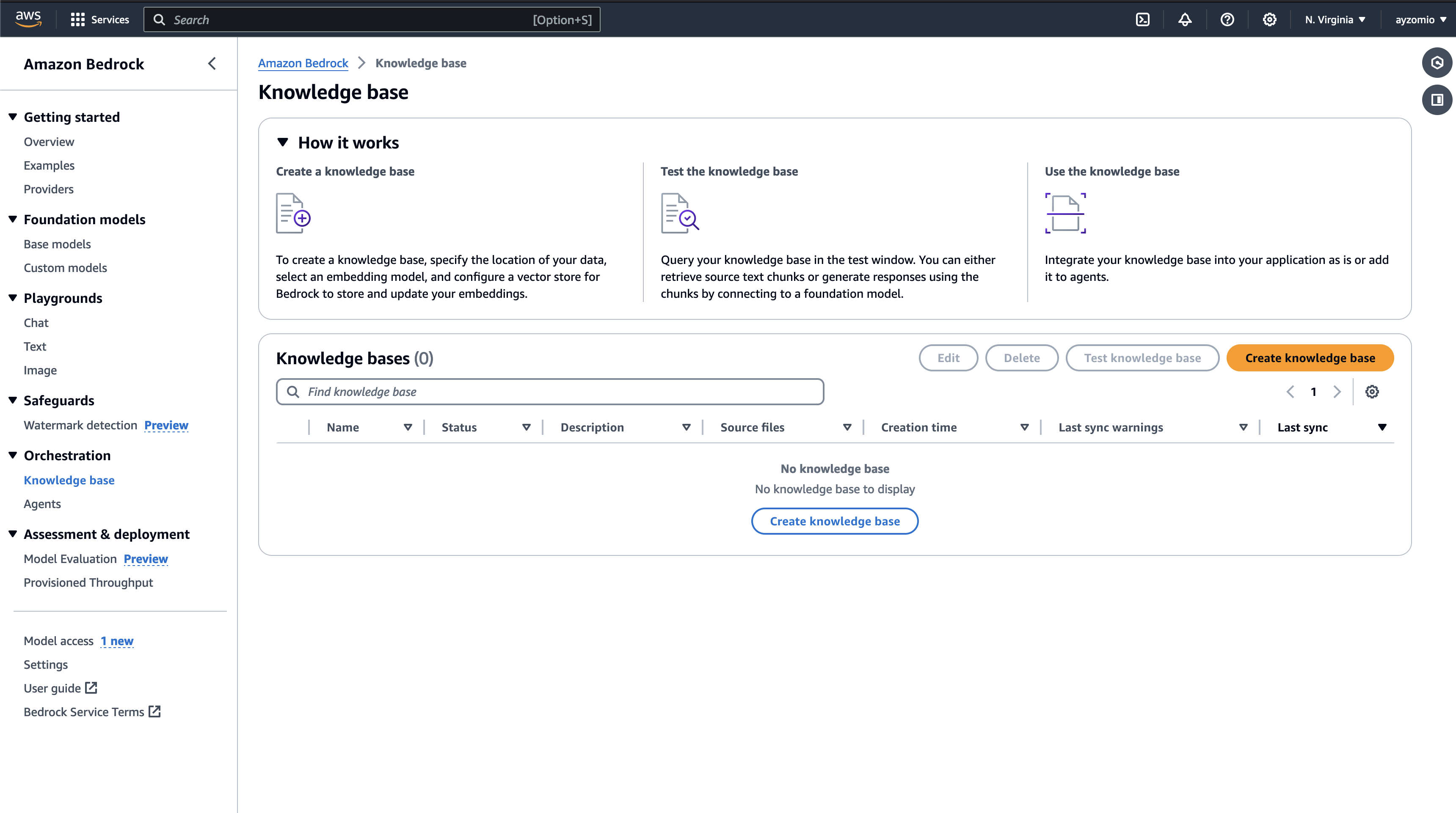

Step 1: Open the AWS Console and search for the AWS Bedrock service. The picture below shows the AWS Bedrock landing page. There you can find:

* the Bedrock foundation models
* playgrounds for checking the response quality of the provided models
* safeguards for protecting confidential information
* orchestration features for using models with your own data sources
* a section for evaluating your models for correctness

Step 2: Click “Knowledge base” under the Orchestration section in the left sidebar menu, then click “Create Knowledge base” to set up a new one.

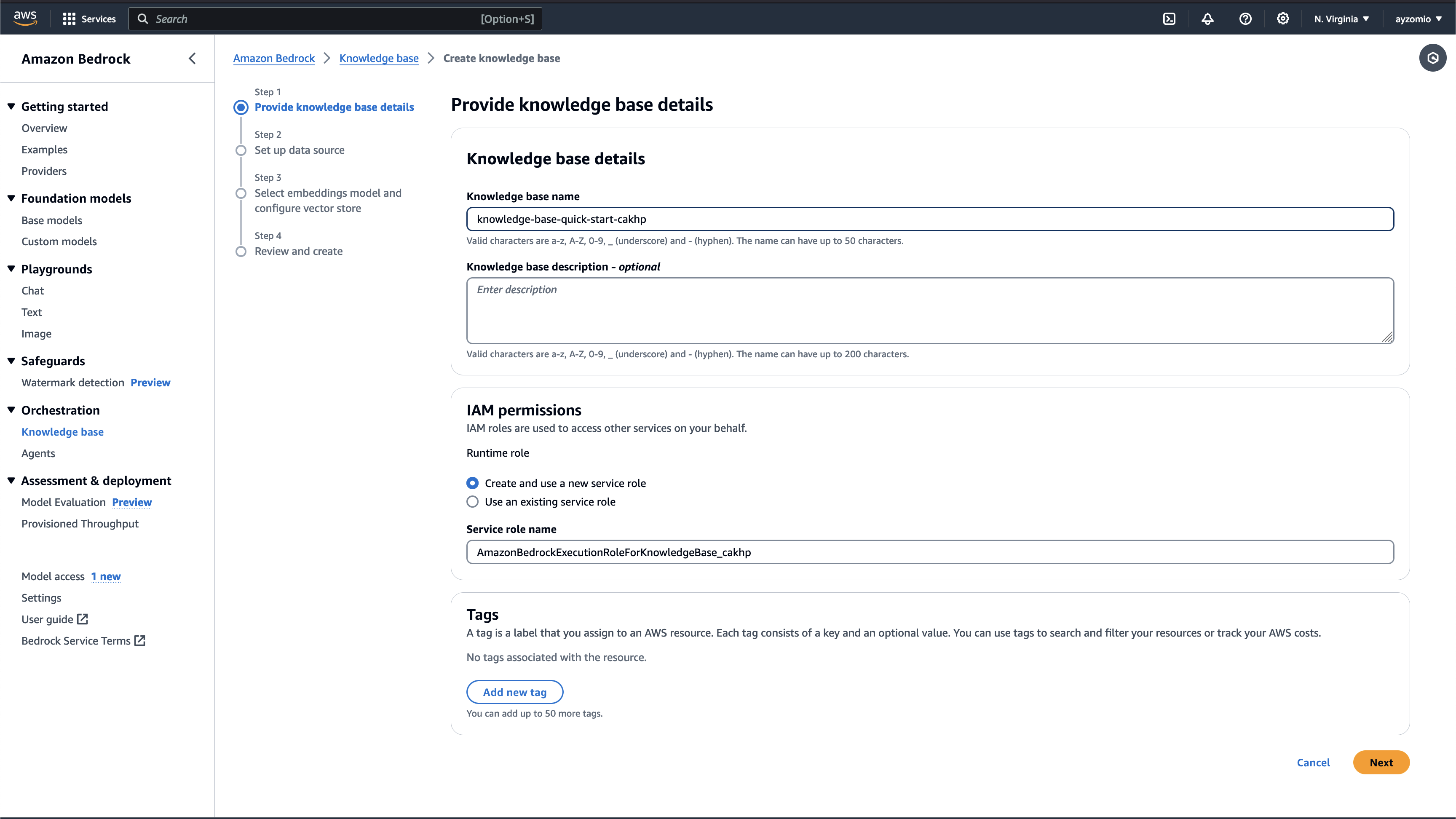

Step 3: Fill in the required details, such as the name and description.

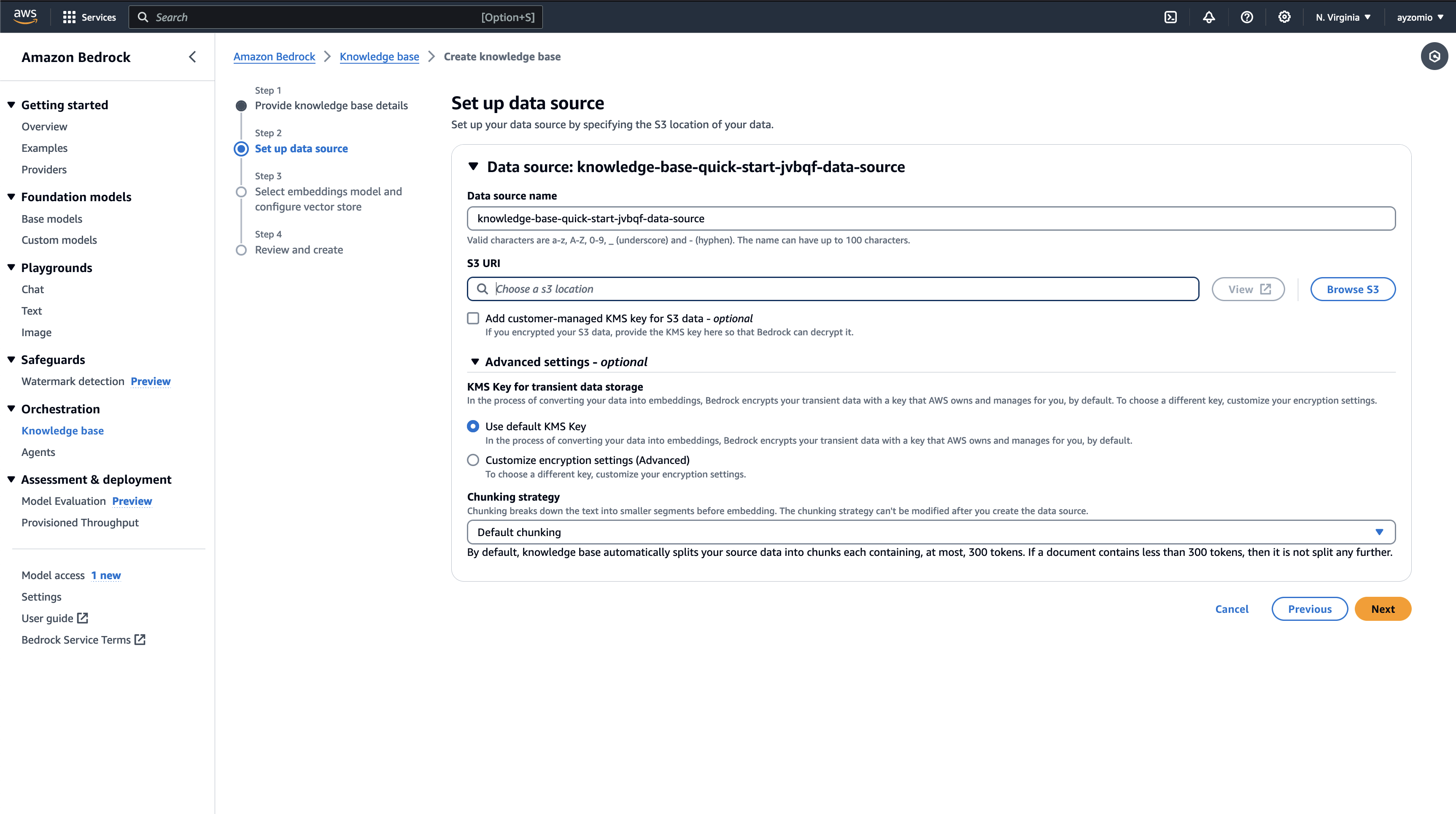

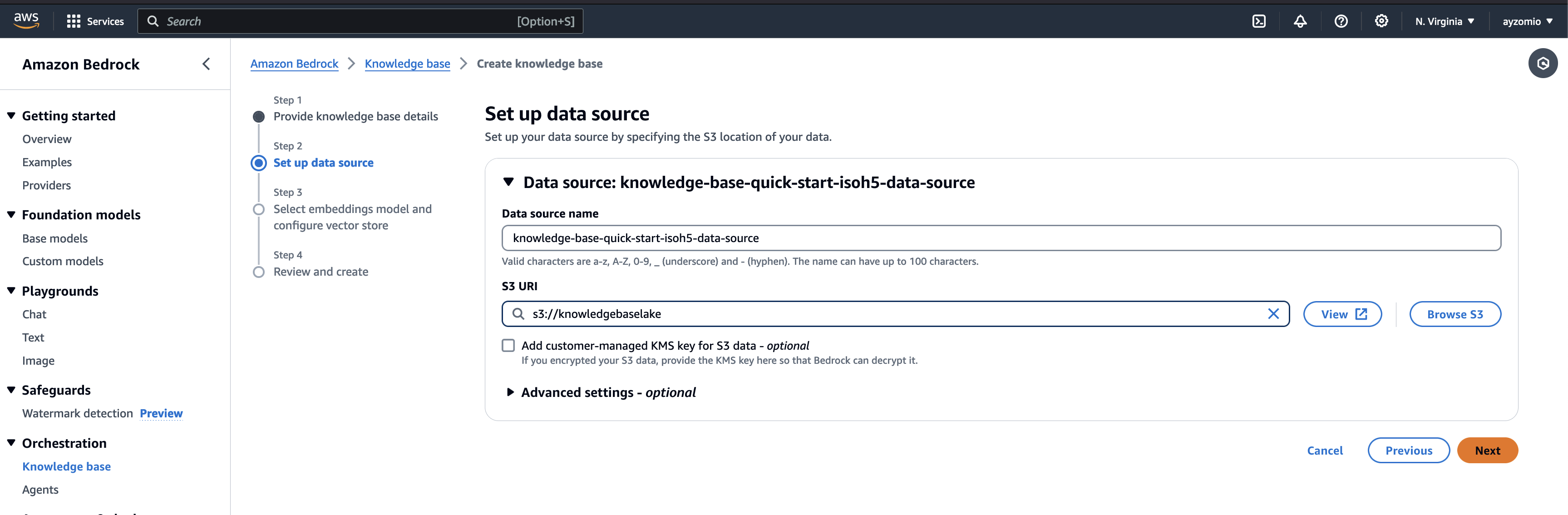

Step 4: The section below lets you choose an S3 bucket as the data source. Select the S3 folder that contains your PDFs, documents, or other textual data written in paragraph form.

Step 5: If you do not have an S3 bucket yet, you can create a new one.

Step 6: For this example, I took the health insurance portability FAQ document from https://www.bajajallianz.com/download-documents/health-insurance/portability-faqs.pdf and uploaded it to the S3 bucket. Once done, click “Next”.
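You can also script Steps 5 and 6 instead of using the console. The following is a sketch with the AWS SDK for Python (boto3). The bucket name, region, local folder, and the set of file extensions are assumptions to adapt. Bedrock supports more document formats than the three listed, so check its documentation. The functions take an S3 client as a parameter, so you can swap in a stub client when trying them out.

```python
from pathlib import Path

# Illustrative subset of document types; Bedrock supports more (see its docs).
ALLOWED_SUFFIXES = {".pdf", ".docx", ".txt"}


def create_bucket(s3_client, bucket: str, region: str) -> None:
    """Create an S3 bucket; us-east-1 must not receive a LocationConstraint."""
    if region == "us-east-1":
        s3_client.create_bucket(Bucket=bucket)
    else:
        s3_client.create_bucket(
            Bucket=bucket,
            CreateBucketConfiguration={"LocationConstraint": region},
        )


def upload_documents(s3_client, bucket: str, folder: str, prefix: str = "") -> list[str]:
    """Upload matching files from a local folder and return the S3 keys used."""
    keys = []
    for path in sorted(Path(folder).iterdir()):
        if path.is_file() and path.suffix.lower() in ALLOWED_SUFFIXES:
            key = f"{prefix}{path.name}"
            s3_client.upload_file(str(path), bucket, key)
            keys.append(key)
    return keys


# Example usage (requires boto3 and AWS credentials; names are placeholders):
#   import boto3
#   s3 = boto3.client("s3", region_name="ap-south-1")
#   create_bucket(s3, "my-kb-documents", "ap-south-1")
#   upload_documents(s3, "my-kb-documents", "./docs", prefix="health-insurance/")
```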

Step 7: Select the embedding model that will convert the documents from your data source into vectors for the vector store. Once done, click “Next”.
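You can also call an embedding model directly to see what it does with your text. This is a sketch using the boto3 `bedrock-runtime` client. It assumes the Amazon Titan Text Embeddings model (`amazon.titan-embed-text-v1`). That model's request body takes an `inputText` field, and its response contains an `embedding` list. Other embedding models use different request formats, and you need access to the model in your account.

```python
import json

# Assumed model ID; use whichever embedding model you selected and have access to.
EMBEDDING_MODEL_ID = "amazon.titan-embed-text-v1"


def embed_text(bedrock_runtime, text: str, model_id: str = EMBEDDING_MODEL_ID) -> list[float]:
    """Return the embedding vector a Titan text embedding model produces for `text`."""
    response = bedrock_runtime.invoke_model(
        modelId=model_id,
        body=json.dumps({"inputText": text}),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream holding JSON such as {"embedding": [...], ...}.
    payload = json.loads(response["body"].read())
    return payload["embedding"]


# Example usage (requires boto3, AWS credentials, and model access):
#   import boto3
#   runtime = boto3.client("bedrock-runtime")
#   vector = embed_text(runtime, "What is health insurance portability?")
#   print(len(vector))
```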

Step 8: Review all your details on the final page. Once done, click “Create Knowledge Base”.

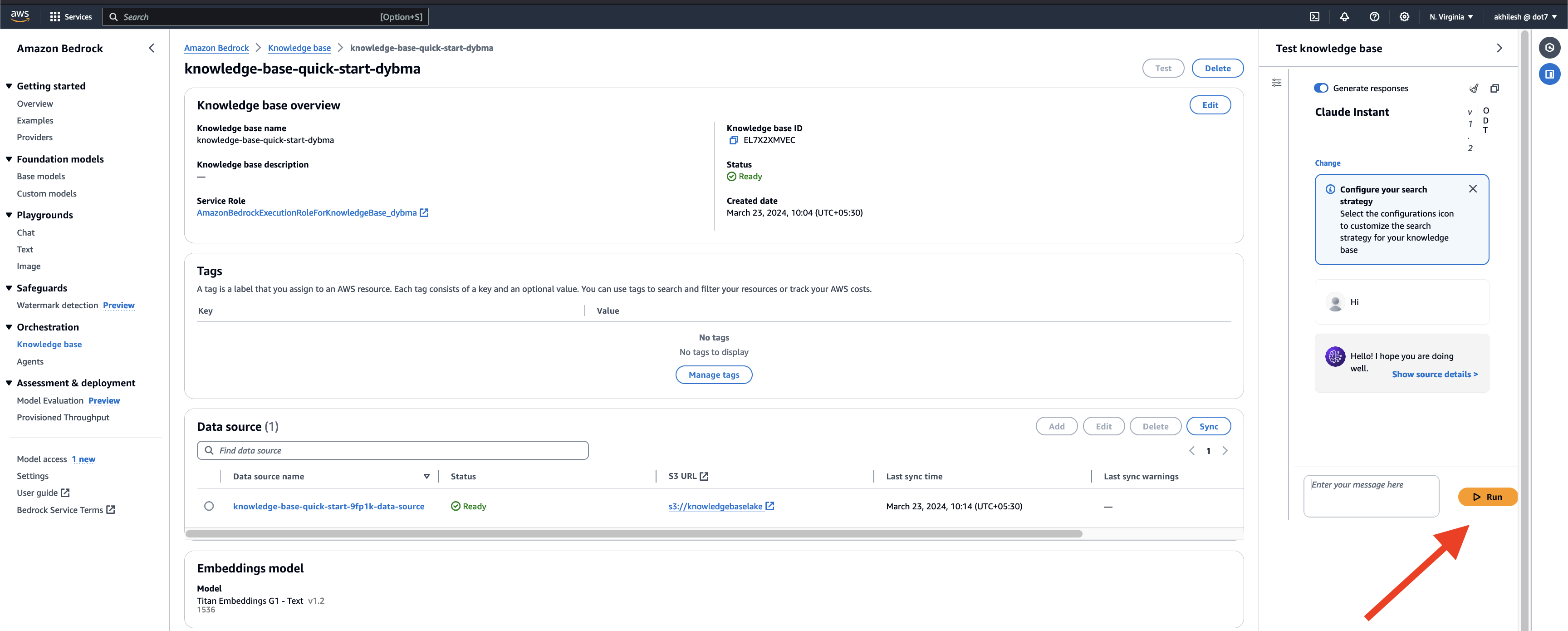
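Creating the knowledge base does not index your documents by itself. The data source has to be synced, which starts an ingestion job that chunks and embeds the files. The console provides a Sync button for this. The following is a rough scripted equivalent using the boto3 `bedrock-agent` client. The knowledge base ID and data source ID are placeholders that you would copy from the console.

```python
import time

# Statuses after which the job will not progress further.
TERMINAL_STATUSES = {"COMPLETE", "FAILED", "STOPPED"}


def sync_data_source(bedrock_agent, kb_id: str, data_source_id: str,
                     poll_seconds: float = 10.0) -> str:
    """Start an ingestion job for one data source and wait until it finishes."""
    job = bedrock_agent.start_ingestion_job(
        knowledgeBaseId=kb_id, dataSourceId=data_source_id
    )["ingestionJob"]
    # Poll until the job reaches a terminal status.
    while job["status"] not in TERMINAL_STATUSES:
        time.sleep(poll_seconds)
        job = bedrock_agent.get_ingestion_job(
            knowledgeBaseId=kb_id,
            dataSourceId=data_source_id,
            ingestionJobId=job["ingestionJobId"],
        )["ingestionJob"]
    return job["status"]


# Example usage (placeholders; requires boto3 and AWS credentials):
#   import boto3
#   agent = boto3.client("bedrock-agent")
#   print(sync_data_source(agent, "<kb-id>", "<data-source-id>"))
```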

Step 9: Once the knowledge base is created, select its data source and click “Sync” so the documents are ingested. You can then test the knowledge base with different text prompts.
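You can run the same test from code with the `retrieve_and_generate` call on the boto3 `bedrock-agent-runtime` client. It retrieves relevant chunks from the knowledge base and has a foundation model generate an answer from them. The knowledge base ID and model ARN below are placeholders. Pick a generation model you have access to.

```python
def ask_knowledge_base(agent_runtime, kb_id: str, model_arn: str, question: str) -> str:
    """Retrieve relevant chunks from the knowledge base and generate an answer."""
    response = agent_runtime.retrieve_and_generate(
        input={"text": question},
        retrieveAndGenerateConfiguration={
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": kb_id,
                "modelArn": model_arn,
            },
        },
    )
    # The generated answer text; the response also carries citations.
    return response["output"]["text"]


# Example usage (placeholders; requires boto3 and AWS credentials):
#   import boto3
#   runtime = boto3.client("bedrock-agent-runtime")
#   model_arn = "arn:aws:bedrock:<region>::foundation-model/<model-id>"
#   print(ask_knowledge_base(runtime, "<kb-id>", model_arn, "Can I port my policy?"))
```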

What are Agents?

With Agents for Amazon Bedrock, you can create something like your own version of Alexa for your application. This assistant, or agent, can complete tasks for your users based on your data and their requests.

For example, let’s say you have a travel app. You can create an agent that helps users book flights. When a user asks to book a flight, the agent can check available flights, prices, and even make the booking for them. It can also use knowledge bases to provide additional information like travel restrictions or COVID-19 guidelines.

Agents handle much of this without you having to write lots of code from scratch. Through action groups, they can call other software, such as airline databases or booking systems, while managing the conversation with the user. This saves time and effort and lets you build advanced AI applications faster. You can also attach AWS Lambda functions to make the agent’s actions dynamic.
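To give an agent like the travel example a real action, you attach an action group backed by an AWS Lambda function. The handler below is a minimal sketch. It assumes an action group defined with function details and a hypothetical `get_flight_price` function that takes `origin` and `destination` parameters. The fare table is made up. The event and response shapes are based on the Bedrock agents Lambda format for function-details action groups. Double-check them against the AWS documentation before relying on this.

```python
import json

# Hypothetical fares for illustration; a real action would call an airline or booking API.
FARES = {("DEL", "BOM"): 4500, ("BOM", "BLR"): 3900}


def lambda_handler(event, context):
    """Handle a function call from a Bedrock agent action group (function-details format)."""
    # Parameters arrive as a list of {"name", "type", "value"} dicts.
    params = {p["name"]: p["value"] for p in event.get("parameters", [])}
    route = (params.get("origin"), params.get("destination"))
    if route in FARES:
        result = {"origin": route[0], "destination": route[1], "price": FARES[route]}
    else:
        result = {"message": "No flights found for that route."}
    # Echo the action group and function so the agent can match the response.
    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event["actionGroup"],
            "function": event["function"],
            "functionResponse": {
                "responseBody": {"TEXT": {"body": json.dumps(result)}}
            },
        },
    }
```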

Next, we will set up an agent for the knowledge base we created.

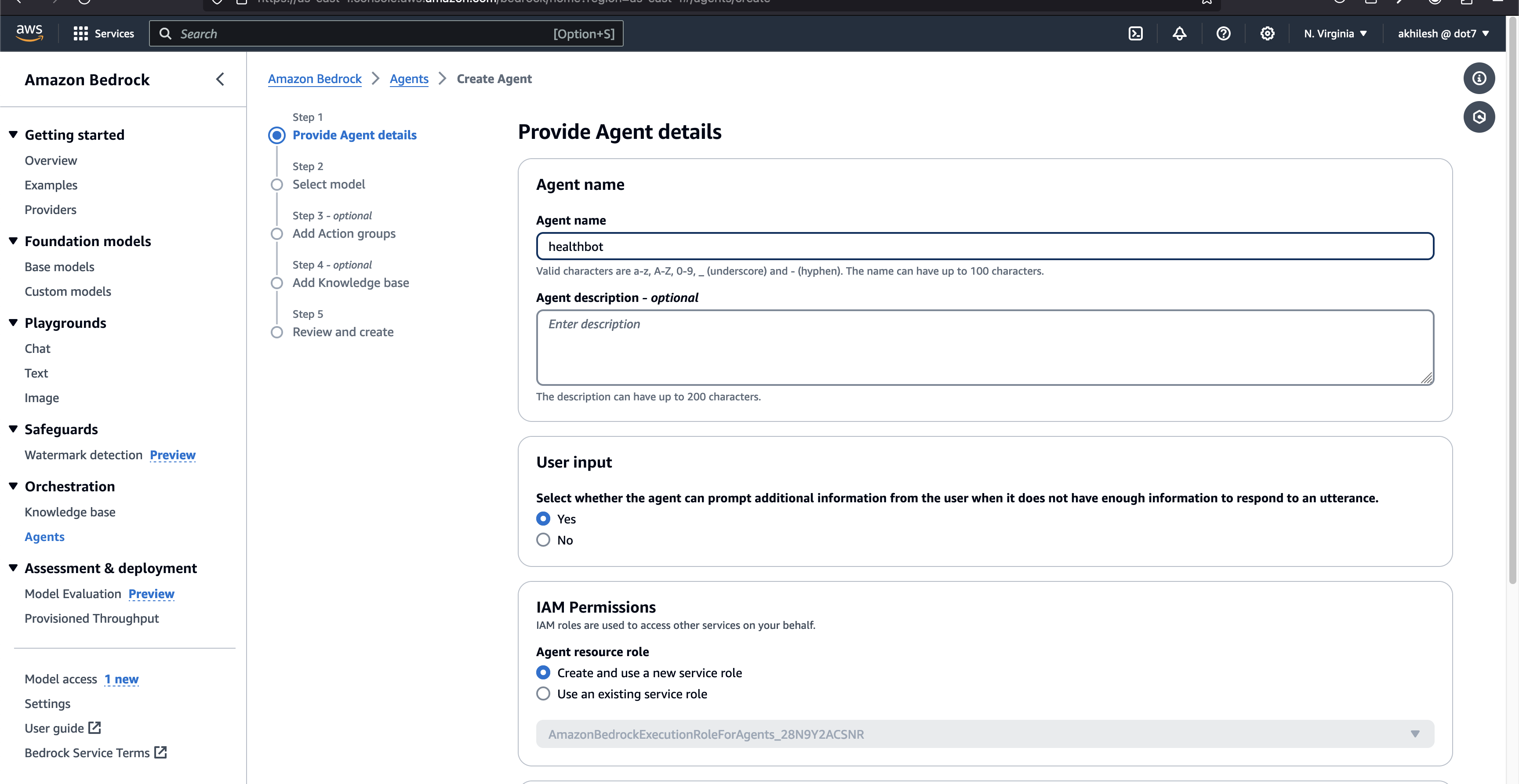

Step 1: Click “Agents” under the Orchestration section.

Step 2: Add the agent details, such as its name and description.

Step 3: Select one of the available models from the dropdown.
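The dropdown lists the foundation models offered in your region. You can see the same catalog from code with `list_foundation_models` on the boto3 `bedrock` control-plane client. The sketch below filters by output modality. A model appearing in this list does not mean your account has been granted access to it.

```python
def list_model_ids(bedrock_client, output_modality: str = "TEXT") -> list[str]:
    """Return sorted IDs of foundation models that produce the given output modality."""
    # byOutputModality filters the catalog, e.g. "TEXT" for chat models or "EMBEDDING".
    response = bedrock_client.list_foundation_models(byOutputModality=output_modality)
    return sorted(summary["modelId"] for summary in response["modelSummaries"])


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   bedrock = boto3.client("bedrock")
#   print(list_model_ids(bedrock))               # candidate models for the agent
#   print(list_model_ids(bedrock, "EMBEDDING"))  # candidate embedding models
```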

Step 4: The step below is optional, so you can skip it.

Step 5: Select the knowledge base you created earlier.

Step 6: Finally, review all the details and click “Create Agent”.

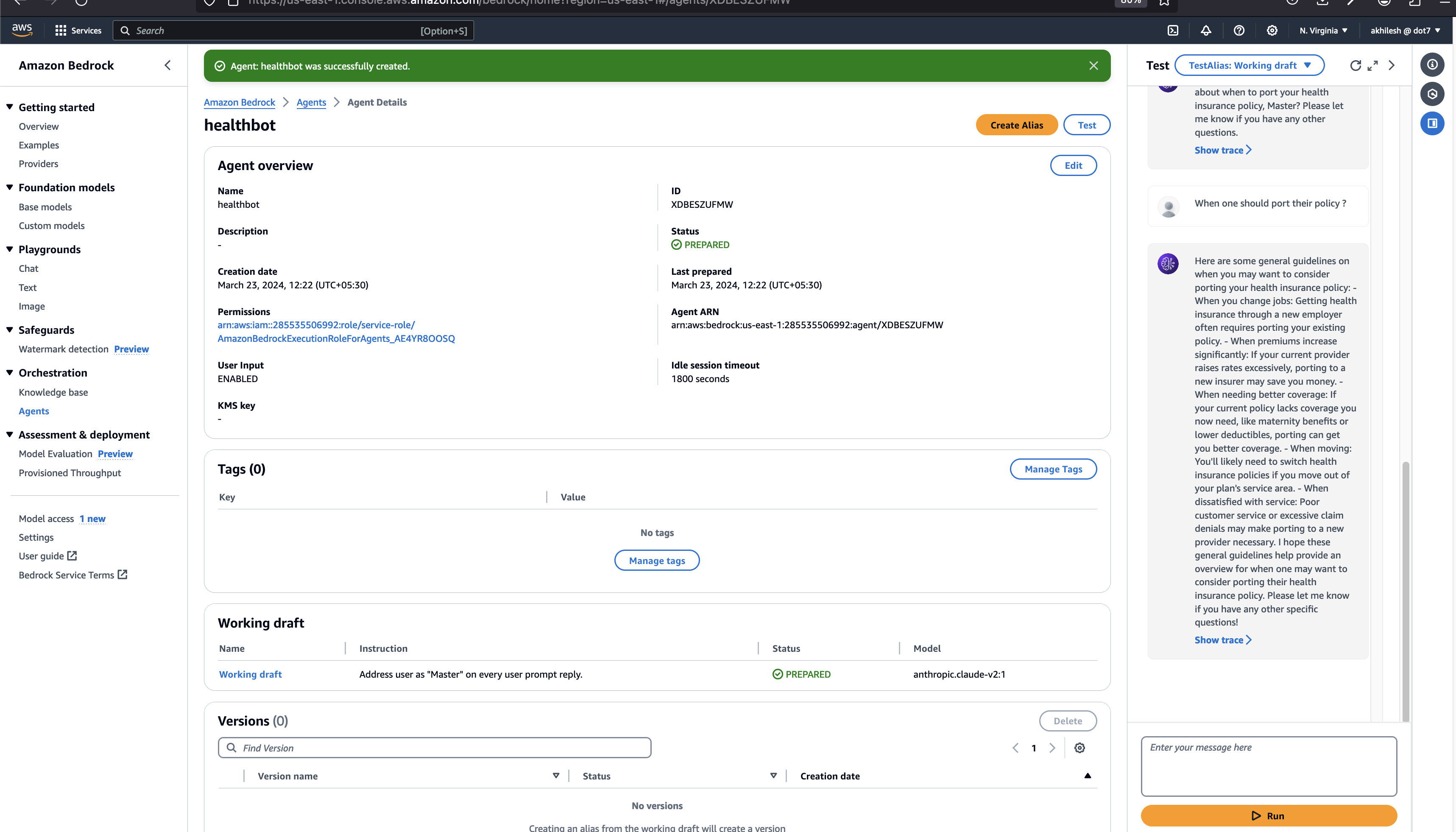

Step 7: Test the created agent with different prompts.
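You can also call the agent from code instead of the console's test window. Below is a minimal sketch with the boto3 `bedrock-agent-runtime` client, and the agent ID and alias ID are placeholders. For quick experiments, the built-in test alias `TSTALIASID` usually points at the agent's working draft. An application would normally use an alias you create yourself.

```python
import uuid
from typing import Optional


def ask_agent(agent_runtime, agent_id: str, alias_id: str, question: str,
              session_id: Optional[str] = None) -> str:
    """Send one prompt to a Bedrock agent and join the streamed answer chunks."""
    response = agent_runtime.invoke_agent(
        agentId=agent_id,
        agentAliasId=alias_id,
        # Reusing the same session ID lets the agent remember earlier turns.
        sessionId=session_id or str(uuid.uuid4()),
        inputText=question,
    )
    parts = []
    # The completion is an event stream; answer text arrives in "chunk" events as bytes.
    for event in response["completion"]:
        if "chunk" in event:
            parts.append(event["chunk"]["bytes"].decode("utf-8"))
    return "".join(parts)


# Example usage (placeholders; requires boto3 and AWS credentials):
#   import boto3
#   runtime = boto3.client("bedrock-agent-runtime")
#   print(ask_agent(runtime, "<agent-id>", "TSTALIASID", "How do I port my policy?"))
```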

Reference:

https://docs.aws.amazon.com/bedrock/latest/userguide/

You can also call the created agent through the AWS SDK or REST API and integrate it into your software application. Feel free to drop a query if you hit any issues.